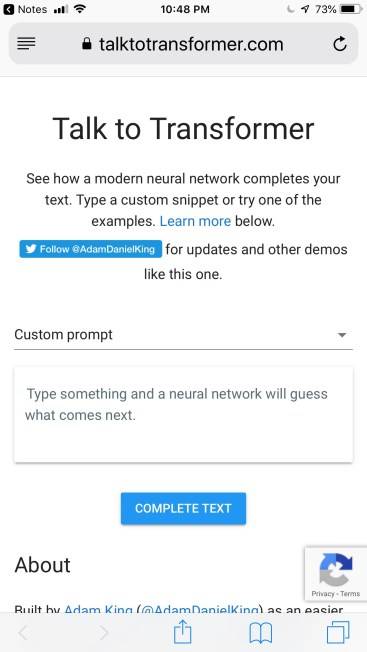

Talk To Transformer is an interesting online tool built by Adam Daniel King and available on the eponymous website. You type the beginning of a sentence, and within a fraction of a second it guesses the words that follow. It is powered by GPT-2 (Generative Pretrained Transformer 2), a state-of-the-art language model from OpenAI that can perform common Natural Language Processing (NLP) tasks on almost any piece of text.

The exciting thing about this project is that OpenAI released the GPT-2 model publicly. During pretraining, the model’s weights and biases were optimized to capture the patterns of text written naturally by human beings. This gives it the ability to predict the rest of a sentence when fed with just the beginning; in fact, it can deliver a convincing result from a single word. This clearly sets it apart from the knowledge-engineering systems used previously, which relied on hand-written rules or on queries that simply looked for exact matches in previously stored data.

In the bigger picture, this project is a step towards a fully automated AI that can converse with human beings in natural language on topics from a vast range of genres.


What is GPT-2?

As mentioned above, GPT stands for Generative Pretrained Transformer, and GPT-2 is an improved version of its predecessor, GPT. The transformer is a neural network architecture that is complex and expensive to build and train from scratch. Because GPT-2 comes pretrained and its weights are publicly available, companies and developers can focus on creative uses instead of spending countless hours training and tuning such a model themselves. Being generative means it can create text it has never seen before, based on the data it was trained on. It is also predictive: given an input, it estimates which word is most likely to come next, appends it, and repeats the process to complete the sentence in a meaningful way. Because it was pretrained on a large, general body of text, it can also be fine-tuned or combined with other NLP tools, standing on the shoulders of giants to generate text in almost any format or flavor the user chooses.
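GPT-2 itself is a large neural network, but the predict-one-word-then-repeat loop described above can be sketched with a far simpler model. The example below is a minimal toy, assuming a bigram model that only counts which word follows which in a tiny made-up corpus; it is not how GPT-2 scores words, and the names `train_bigrams` and `complete` are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for every word, how often each other word follows it."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for prev, nxt in zip(words, words[1:]):
        follows[prev][nxt] += 1
    return follows


def complete(prompt, follows, max_new_words=5):
    """Extend the prompt one word at a time, feeding each choice back in."""
    words = prompt.lower().split()
    for _ in range(max_new_words):
        candidates = follows.get(words[-1])
        if not candidates:
            break  # the toy model has never seen this word, so it stops
        # Greedy choice: take the most frequent follower. Real models such as
        # GPT-2 score every token in their vocabulary and often sample instead.
        next_word, _count = candidates.most_common(1)[0]
        words.append(next_word)
    return " ".join(words)


corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(complete("the dog", model, max_new_words=4))
```

Running it prints `the dog sat on the cat`, which also shows why a model that only looks at the previous word produces odd results; GPT-2 conditions each prediction on the whole preceding passage (up to 1,024 tokens).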

The inspiration behind Talk To Transformer

In simple words, Talk To Transformer is a bot that uses artificial intelligence and NLP to complete sentences. It was built with a PyTorch implementation of the GPT-2 model, which was possible thanks to affordable yet powerful computing resources available through cloud computing. OpenAI, the team that created GPT-2, trained it on a large corpus of text collected from the internet, which is what allows the model to produce such natural-sounding writing.

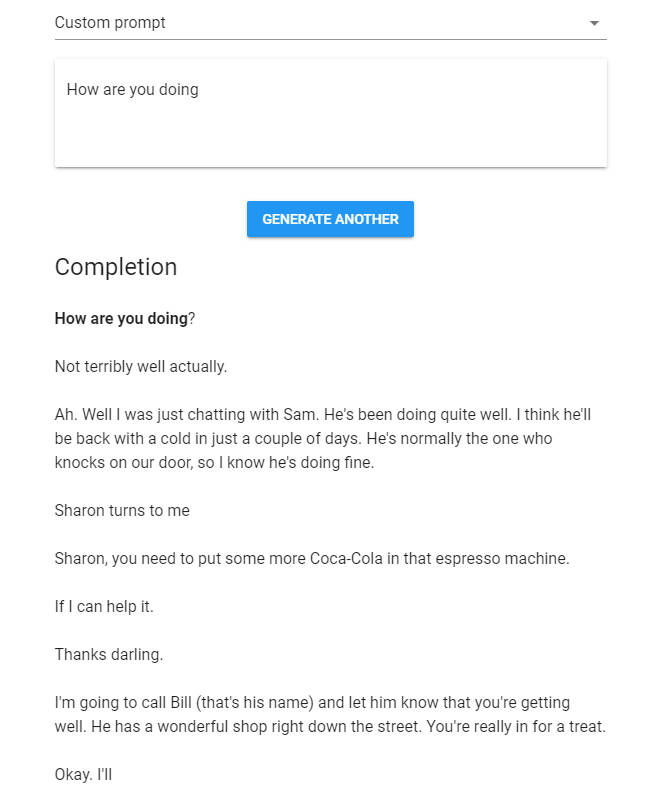

How to use Talk to Transformer?

Using Talk to Transformer is fairly easy: simply type a word, sentence or paragraph into the text field, or choose a preset prompt in the writing style you want. The options include Elon Musk’s debut album, Unicorns that speak English, Sci-fi, Cooking instructions and more. That’s it; the website automatically generates text based on your input. If you want a longer text, copy the last few lines generated by the tool and paste them back into the text field. Truth be told, you could keep doing this until you get bored.
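The copy-and-paste trick works because a model like GPT-2 only reads a limited window of recent text (1,024 tokens for GPT-2), so the tail of the story is what steers the next continuation. A minimal sketch of that loop follows; `extend_story` and `fake_generate` are hypothetical names, and `fake_generate` is a stand-in for a real text generator, not Talk To Transformer’s actual interface.

```python
from typing import Callable


def extend_story(generate: Callable[[str], str], seed: str,
                 rounds: int, tail_words: int = 50) -> str:
    """Grow a text by repeatedly prompting with only its most recent words."""
    story = seed
    for _ in range(rounds):
        # Mimic "copy the last few lines and paste them back in":
        # only the tail of the story is sent as the next prompt.
        prompt = " ".join(story.split()[-tail_words:])
        story += " " + generate(prompt)
    return story


def fake_generate(prompt: str) -> str:
    """Hypothetical stand-in for a real model: echoes the prompt's last word."""
    return prompt.split()[-1] + "!"


print(extend_story(fake_generate, "Once upon a time", rounds=3, tail_words=2))
```

Here `tail_words` plays the role of how many lines you choose to copy; a real model would see those words as tokens and would ignore anything beyond its context window anyway.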

So what can Talk To Transformer be used for?

Such a tool has immense scope for applications across the internet. It can be used to auto-complete questions raised by users in a forum and auto-suggest answers to them, which can speed up troubleshooting. GPT-2 has made a name for itself as a glorified text generator that produces text based on the context offered by the input, and the same grasp of context allows it to be used as a standalone text analyzer. When coupled with speech recognition software and text-to-speech engines, it could help create an application that competes with Siri, Alexa and the like. Employed as a bot, such an application can gather useful information from users and thus streamline data collection. This could be useful for several industries, such as healthcare, where users could get suggestions for simple health issues while critical issues are routed to the relevant professionals.

Possible dangers of using Talk To Transformer

Unless you have been living under a rock, you have probably come across the concerns raised by deepfakes. Videos of the 44th US President, Barack Obama, have been fabricated with such models, making him appear to say things he never said. A quick search online will turn up plenty of malicious applications of deepfake technology.

Such stories have pushed the developer community to invest just as much time in building tools that can detect these fake creations and alert users, to prevent the spread of fake news. Since generating text is a lot easier than generating a realistic audio-video feed, there were early fears that GPT-2 would be misused. In fact, OpenAI initially withheld the full GPT-2 model, and it was widely dubbed an AI that was too dangerous to release. Once the model was released openly, anyone in the world could use it to build whatever products they fancied. The lack of regulation by any central authority has become a source of worry even for enthusiasts, who fear this incredibly useful tool could be misused to spread rumors and misinformation. To see an example, check out News you can’t use, a fake news generation website that uses such models to create news that reads as quite realistic.

The future of GPT-2 and Talk to Transformer

Talk to Transformer is hosted on a cloud supplied by Lambda, whose offerings include servers with powerful GPUs. GPUs are well suited to the large, highly parallel matrix computations at the heart of machine learning algorithms. Since a developer or a team no longer needs to buy expensive hardware to watch their ideas come to life, we could see many more interesting projects that push the boundaries of machine learning.

In fact, another interesting GPT-based project is AI Dungeon 2, a choose-your-own-adventure, text-based game. You select one of five genres (fantasy, mystery, apocalyptic, zombie or custom) and start playing. The game gives you prompts, you reply, and the story continues from there. The makers of the game combined GPT-2 with data from ChooseYourStory.com, a website with a dedicated community of people writing fiction.

There’s also Hugging Face’s Write With Transformer, a Talk To Transformer alternative built on a similar premise that lets you use various models, including GPT-2. However, it only completes a line rather than generating an entire paragraph.

GPT-2 has already received an upgrade in the form of GPT-3, which brings us to…

What is GPT-3?

Introduced by OpenAI, GPT-3 is one of the most recent additions to the AI world; it was released in June 2020. The successor to GPT-2 is superior in many respects, not least because its number of parameters is staggeringly high: 175 billion, compared with GPT-2’s 1.5 billion.

These parameters are the learned weights of the network: each one controls how much a particular aspect of the input data contributes to the model’s calculations, so every piece of data is weighted and viewed in context. Thanks to this massive number of parameters, GPT-3 can often take on a new task without any task-specific training, given only an instruction or a few examples. As an efficient text generation tool, it can write many kinds of text fluently on its own, such as blogs, ads, stories and technical content. However, users still need to specify the purpose and preferred writing style to get good results.
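To make “parameters” concrete: in a fully connected layer, each output is a weighted sum of the inputs plus a bias, and every weight and bias is a learned parameter. The sketch below assumes a single tiny layer with made-up numbers; GPT-3’s 175 billion parameters are values of this kind spread across many layers, though its architecture is far more elaborate than one dense layer. The function names are hypothetical.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: each output is a weighted sum plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def count_dense_params(n_in, n_out):
    """One weight per input-output pair, plus one bias per output."""
    return n_in * n_out + n_out


# Made-up numbers for a layer with 3 inputs and 2 outputs.
x = [1.0, 2.0, 3.0]
W = [[0.5, -1.0, 0.25],
     [0.0, 0.1, 0.2]]
b = [0.1, -0.2]

print(dense(x, W, b))            # roughly [-0.65, 0.6]
print(count_dense_params(3, 2))  # 8 parameters in this tiny layer

# Scale comparison from the text: GPT-3 vs GPT-2 parameter counts.
print(round(175e9 / 1.5e9))      # about 117 times as many
```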

This level of capability makes GPT-3 an efficient idea generator. It can cut down the human effort spent fine-tuning various text-processing algorithms, and it can also assist with technical jobs such as writing code, handling billing queries and acting as a virtual receptionist.

Closing thoughts

Great achievements come with perseverance: to build GPT-2, OpenAI trained the model on 40 gigabytes of plain-text data. Several comparisons have been made to convey the true size of this training data. The entire Sherlock Holmes collection fits within just a few megabytes, and the complete works of Shakespeare come to roughly 5 megabytes. Even The Wheel of Time by Robert Jordan and Brandon Sanderson, one of the longest completed fiction series in the world at 4.4 million words, would make up less than 1 percent of the input. These examples illustrate just how much text the model was trained on.
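The Wheel of Time comparison is easy to check with back-of-the-envelope arithmetic. The sketch below assumes about 6 bytes per English word (five letters plus a space, in plain ASCII) and decimal gigabytes; both are rough assumptions, not figures from OpenAI.

```python
WORDS_IN_SERIES = 4_400_000        # The Wheel of Time word count quoted above
BYTES_PER_WORD = 6                 # assumption: ~5 letters + 1 space, ASCII text
TRAINING_BYTES = 40 * 10**9        # 40 GB, using decimal gigabytes

# Estimated size of the whole series as plain text.
series_bytes = WORDS_IN_SERIES * BYTES_PER_WORD
# Fraction of the 40 GB training corpus that the series would occupy.
share = series_bytes / TRAINING_BYTES

print(f"Series size: {series_bytes / 10**6:.1f} MB")
print(f"Share of training data: {share:.3%}")
```

Under these assumptions the series takes up about 26 MB, well under 0.1 percent of the 40 GB corpus, so even a generous bytes-per-word estimate keeps it far below the 1 percent mark.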

In sum, Talk To Transformer AI is certainly quite impressive. Most people who visit the website and try the project for the first time are astonished by its efficiency and ability. It also shows that, just like human writing, auto-generated text can be serious, funny, instructive and more. It is a great inspiration for machine learning and artificial intelligence enthusiasts to build similar tools and perhaps even monetize their services. While there are certainly potential misuses of this technology, we are quite excited to see how it evolves in the future.
